2023-04-02

TL;DR: I built a network-attached storage server out of a netbook and a USB hard drive bay. The system works well, and the hardware was inexpensive. I used ZFS to provide redundancy and efficient offsite replication.

Everybody should have backups for the data they’d rather not lose. A wonderful advantage that the digital world has over the physical is that making copies of stuff is usually trivial. Can you copy your house? Your cat? Your left pinky toe? Of course not, but you can copy photos of all those things as many times as you care to, provided you have enough space and time[1].

But why make backups at all? Three big reasons:

Data backups guard against all of these. That’s probably not news to you, but it’s one of those things that “everyone knows” and yet few people seem to do. Until this project, I didn’t have a good backup solution, so I was just hoping that I’d make it through if something happened. Fortunately, nothing did. And the truth is that I could still lose data even after finishing this project. The point is that my chances of unrecoverable data loss have gone from “meh, probably low unless I do something stupid or have bad luck” to “comfortably low in all practical circumstances”.

The first place I went when I started this project was pcpartpicker.com. I knew I wanted to use ZFS and Debian, which seemed like stable choices, so I just needed some hardware to put them on and get going. I’ve never built a proper motherboard-and-tower PC before, so I did a lot of book learning here.

I read in several places that ECC RAM is important for robust ZFS installations[3], so I restricted myself to components that would support it. Turns out there aren’t a lot of options (at least where I was looking), and the ones that exist are noticeably more costly than their non-ECC counterparts. I suspect that if I looked somewhere other than a gamer-focused parts site, then maybe I’d find something more reasonable, but I’d probably also pay some general markup for “server-grade components” that I don’t need for this project.

After a lot of fiddling with filters, I made a respectable build for a budget ECC NAS[4], but as of writing the total price is around $500 without drives. From what I can tell, this is a typical result in this space, so I probably shouldn’t be surprised. (I was surprised anyway.) I had budgeted around $500 for this project in total (including drives), so I needed something else.

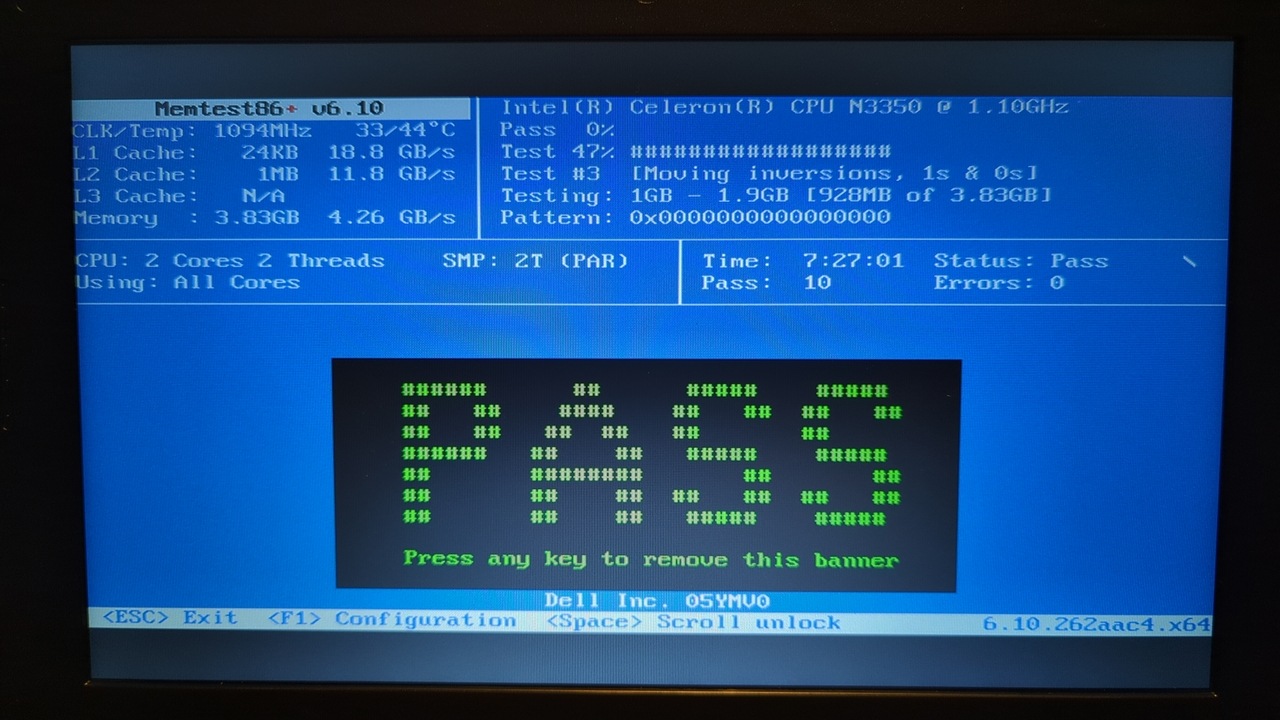

I started questioning the necessity of ECC RAM. It seems like there’s a lot of uncertainty in the true prevalence of random bit flips in otherwise working memory. Some sources indicated that the most common causes of memory issues are bad hardware and bad connections, and both of these can be detected reasonably well using memory testing software. In the end, I took a chance and went with non-ECC memory, and this reduced the cost of the components significantly.
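For a feel of how ECC memory recovers from a single flipped bit, here is a toy Hamming(7,4) code in Python. It illustrates the general technique only; it is not what a memory controller literally runs. ECC DIMMs typically use a wider code over 64-bit words that also detects (but cannot correct) double-bit errors. The function names are made up for this sketch.

```python
def encode(data: list[int]) -> list[int]:
    """Encode 4 data bits into a 7-bit Hamming codeword.

    Parity bits sit at positions 1, 2, and 4 (1-indexed); data bits fill 3, 5, 6, 7.
    """
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def decode(code: list[int]) -> tuple[list[int], int]:
    """Correct at most one flipped bit; return (data bits, error position or 0)."""
    c = [0] + list(code)  # pad so c[1]..c[7] match the 1-indexed positions
    s1 = c[1] ^ c[3] ^ c[5] ^ c[7]
    s2 = c[2] ^ c[3] ^ c[6] ^ c[7]
    s4 = c[4] ^ c[5] ^ c[6] ^ c[7]
    position = s1 + 2 * s2 + 4 * s4  # the syndrome spells out the bad position
    if position:
        c[position] ^= 1  # flip the bad bit back
    return [c[3], c[5], c[6], c[7]], position
```

For example, `encode([1, 0, 1, 1])` followed by flipping any single bit still decodes to `[1, 0, 1, 1]`, and the returned position identifies which bit was flipped. Non-ECC RAM has no such check bits, so a flip like that goes unnoticed.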

Once I removed the ECC RAM restriction, I realized that I had a Raspberry Pi 3 lying around that might already have the capabilities that I needed. I had been considering direct SATA connections to the drives, but modern USB can provide enough bandwidth for my purposes, so it seemed like a reasonable approach to try. I got as far as installing Debian and ZFS before I saw this video where RobertElderSoftware tried this exact setup. While the arrangement did work, the transfer speeds were unacceptably slow because the Raspberry Pi 3 only has USB 2.0 ports, which top out at 480 Mbit/s (“Hi-Speed”) and lack USB 3.0 SuperSpeed. The Pi 4, which has USB 3.0 ports, would work better, but of course those are hard to find.
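To put rough numbers on “unacceptably slow”, the sketch below computes a best-case transfer time from the nominal USB signaling rates. The 1 TB figure is just an example size. Real throughput is lower than these rates because of protocol overhead and drive speed, so treat the results as lower bounds.

```python
USB2_HIGH_SPEED_BPS = 480e6  # USB 2.0 "Hi-Speed" nominal signaling rate, bits/s
USB3_SUPERSPEED_BPS = 5e9    # USB 3.0 "SuperSpeed" nominal signaling rate, bits/s


def best_case_hours(num_bytes: float, bits_per_second: float) -> float:
    """Lower bound on transfer time; ignores protocol overhead and drive limits."""
    return num_bytes * 8 / bits_per_second / 3600


if __name__ == "__main__":
    one_tb = 1e12  # example size, decimal terabyte
    print(f"USB 2.0: {best_case_hours(one_tb, USB2_HIGH_SPEED_BPS):.1f} h")
    print(f"USB 3.0: {best_case_hours(one_tb, USB3_SUPERSPEED_BPS):.1f} h")
```

That works out to roughly 4.6 hours per terabyte over USB 2.0 versus under half an hour over USB 3.0, before any real-world losses. At USB 3.0 rates, the hard drives become the bottleneck instead of the bus.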

This led me to consider Intel NUCs, which I view as simply more powerful RPis with x86 processors. This probably would have worked fine, but it seems like there’s a kind of “Intel tax” attached to these products. These mini PCs are quite popular, so demand is probably pretty high. Add to that the lack of input and output devices, and the price was creeping up again. I would have needed to buy a monitor and keyboard, which wouldn’t have been too bad, except that I’d only need them once to configure the system. Then I’d be stuck with hardware that I didn’t really need unless something went wrong.

UPDATE 2024-04-02: I was wrong about this, or at least I got unlucky. The netbook I bought seems to have suffered a failure of its eMMC storage after a few months of service, and it became unbootable. (The BIOS doesn’t even see the device.) I can still boot it from live media, for what that’s worth, but it’s no longer a good choice for a backup machine. The funny part is that the RAM still seems to be in perfect condition, which is consistent with the testing I performed. I ended up snatching up an old tower from a family member who was upgrading (one of the perks of being the family IT department) and have been using it for the past few weeks. So far, no problems, although I don’t get my nice built-in keyboard, display, and trackpad anymore. I’m going to leave the original text of this section as-is, but keep these later developments in mind. Apparently, you get what you pay for.

A netbook is a small laptop that can’t do much of anything except provide a minimal interface to the outside world. They’re not too common anymore, which I suppose is a result of “real” laptops becoming more affordable and less optional, but their spirit lives on in Chromebooks and the stalwart members of the lowest-budget laptop category. It turns out that netbooks—particularly refurbished ones—provide a cost-effective solution to the exact problem I faced in this project. In other words, a decent netbook can also be a cute all-in-one server for the not-too-picky sysadmin.

Of course, if you happen to be a real sysadmin, you probably think this is lunacy. After all, depending on refurbished low-end hardware for data backups isn’t exactly rigorous. And you’re right, but consider my threat model. My main concern is data recovery in the case of a complete loss of my primary machine (due to theft, hardware failure, the singularity, etc.), and my secondary concern is recovery of single files or directories when I accidentally delete something. Bonus points if I can serve media and files on my LAN and don’t have to worry about storage for a while. These are not the same concerns as a business, such as the one where you, dear sysadmin, probably work. If you brought my design to your boss, you’d get us both fired[5]. In my case, cheap hardware and a friend willing to host an offsite replica are sufficient to address all of my concerns and bonus points above.

And think of the convenience. With a netbook, you don’t just get a motherboard with all the usual dressing. You also get an integrated keyboard, trackpad, display, and a battery backup system. As far as I can tell, it’s not possible to find this kind of value in traditional server hardware outside of “everything must go” fire sales. Most of these machines have USB 3.0 ports, so the bandwidth issues I faced earlier are not present here. The power consumption is decent as well[6]. Refurbished products that fit this description are common on eBay for under $100.

And that’s exactly what I bought. For less than $200, I had a Dell Latitude 3180 and an Orico USB drive bay. All I needed were some drives[7], and it was all configuration after that.

UPDATE 2023-12-14: One of my drives was consistently overheating in this drive bay, which seems to be because that particular drive is about the same size as its slot. This blocks airflow around the drive and probably reduces its ability to dissipate heat. The drive and slot are both about 25 mm thick, so I recommend only using thinner drives with this bay.
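A problem like this can be caught early by polling drive temperatures. Below is a minimal sketch, assuming `smartctl` (from smartmontools) is installed and the drive reports SMART attribute 194 (`Temperature_Celsius`); some drives report attribute 190 instead, which this sketch ignores. The 50 °C threshold is a placeholder, so check your drive’s datasheet.

```python
import subprocess
from typing import Optional

TEMP_LIMIT_C = 50  # placeholder alert threshold; use your drive's rated maximum


def parse_temperature(smart_attributes: str) -> Optional[int]:
    """Return the raw value of SMART attribute 194 from `smartctl -A` output."""
    for line in smart_attributes.splitlines():
        fields = line.split()
        # Rows look like: ID NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE...
        if len(fields) >= 10 and fields[0] == "194":
            return int(fields[9])
    return None


def read_temperature(device: str) -> Optional[int]:
    """Query one drive. "-d sat" passes ATA commands through a USB-SATA bridge,
    which only works if the enclosure's bridge chip supports it."""
    # No check=True: smartctl's exit status is a bitmask that can be nonzero
    # even when the attribute table was printed successfully.
    result = subprocess.run(
        ["smartctl", "-d", "sat", "-A", device],
        capture_output=True,
        text=True,
    )
    return parse_temperature(result.stdout)
```

A cron job could call `read_temperature` for each device in the bay and raise an alert whenever the value exceeds `TEMP_LIMIT_C`.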

I ran ten passes of memtest86 without any errors, which I consider to be sufficient evidence that I won’t have significant issues with this RAM. I’m willing to tolerate a tiny amount of bitrot over the lifetime of this NAS.

As for the USB bandwidth, it doesn’t seem to be a problem. Using a single bus to access multiple drives (rather than individual SATA cables to each drive) slows things down during writes because RAIDZ has to write parity alongside the data by design, and all the drives share one pipe. Reads seem unaffected, which makes sense because reading a healthy block only requires its data sectors, not the parity, so less traffic has to cross the shared bus. For the price difference, I’m willing to accept this performance reduction as well.
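The write penalty can be estimated with a little arithmetic. In RAIDZ-1 with n drives, a full stripe carries n − 1 data columns plus one parity column, so every byte of user data puts about n / (n − 1) bytes on the shared bus. A healthy read skips the parity and costs about 1:1. The sketch below assumes full stripes and ignores padding, metadata, and compression. The 400 MB/s bus figure in the usage note is a made-up example, not a measurement.

```python
def bus_bytes_per_write_byte(drives: int) -> float:
    """Bytes crossing the shared bus per byte of user data written to RAIDZ-1.

    Assumes full stripes: (drives - 1) data columns plus one parity column.
    """
    if drives < 2:
        raise ValueError("RAIDZ-1 needs more than one drive")
    return drives / (drives - 1)


def throughput_ceilings(bus_mb_s: float, drives: int) -> tuple[float, float]:
    """(write, read) ceilings in MB/s imposed by the shared bus alone.

    Healthy reads fetch only data columns, so they use the bus at 1:1.
    """
    return bus_mb_s / bus_bytes_per_write_byte(drives), bus_mb_s
```

With three drives, `throughput_ceilings(400, 3)` gives a write ceiling of about 267 MB/s against a read ceiling of 400 MB/s. That is a one-third reduction for writes only, which matches the behavior described above. In practice, the drives themselves may be slower than either ceiling.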

Once I had the hardware, OS, and primary software in place, the rest of this project amounted to configuration and data transfer. I had a few drives scattered here and there that contained old backups and other data, so for a few days I’d log in periodically and kick off a new transfer. I used a RAIDZ-1 configuration across three drives, so I had two spare drive slots in the external bay, which made the transfers pretty easy.
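For reference, here is a sketch of how a three-drive RAIDZ-1 pool like this one could be described and sized. The pool name `tank` and the `/dev/disk/by-id/...` paths are hypothetical. The builder adds `zpool create -n` by default, which only prints the layout ZFS would use without creating anything. The capacity estimate assumes equal-sized disks, since ZFS sizes each RAIDZ member to the smallest one, and it ignores ZFS overhead.

```python
import shlex


def raidz1_create_command(pool: str, disks: list[str], dry_run: bool = True) -> list[str]:
    """Build a `zpool create` command for a single RAIDZ-1 vdev."""
    cmd = ["zpool", "create"]
    if dry_run:
        cmd.append("-n")  # display the resulting layout without creating the pool
    return cmd + [pool, "raidz1", *disks]


def raidz1_usable_tb(disk_tb: float, disks: int) -> float:
    """Rough usable capacity: one disk's worth of space goes to parity."""
    return disk_tb * (disks - 1)


# Hypothetical stable device names; /dev/disk/by-id survives USB re-enumeration.
EXAMPLE_DISKS = [f"/dev/disk/by-id/usb-EXAMPLE-DISK-{i}" for i in (1, 2, 3)]
```

`print(shlex.join(raidz1_create_command("tank", EXAMPLE_DISKS)))` shows the command for review before running it. With three hypothetical 4 TB drives, `raidz1_usable_tb(4, 3)` estimates about 8 TB of usable space.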

I set up a daily `anacron` job on my laptop to push any changes to the data on my local disk up to the NAS using `rsync`. I considered switching to ZFS on my laptop as well so I could use `zfs send`, but it seems like setting up ZFS as the root filesystem on Debian takes a lot of effort and might be unreliable.
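A minimal sketch of what such a push job might look like. The source path, the `nas.local` host, and the destination path are all hypothetical. The anacrontab line in the comment uses the standard `period delay job-identifier command` layout, with the period in days and the delay in minutes. System anacron jobs run as root, so root (or whichever user the job switches to) needs SSH key access to the NAS.

```python
import subprocess

# Hypothetical /etc/anacrontab entry (period in days, delay in minutes, job id, command):
#   1  10  nas-push  /usr/local/bin/nas_push.py

SOURCE = "/home/alice/"                  # hypothetical; trailing slash copies the contents
DESTINATION = "nas.local:/tank/laptop/"  # hypothetical NAS host and dataset mountpoint


def build_rsync_command(source: str, destination: str) -> list[str]:
    # -a (archive) recurses and preserves permissions, timestamps, and symlinks.
    # By default rsync skips files whose size and modification time are unchanged.
    # Add "--delete" only if deletions on the laptop should propagate to the NAS.
    return ["rsync", "-a", source, destination]


def push(source: str = SOURCE, destination: str = DESTINATION) -> int:
    """Run one push and return rsync's exit status (0 on success)."""
    return subprocess.run(build_rsync_command(source, destination)).returncode
```

As an anacron job, the script would end with `raise SystemExit(push())` so that a failed transfer shows up as a nonzero exit status.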

`anacron` comes in handy here because my laptop isn’t running all the time, and that’s the exact problem that `anacron` solves. I also set up an ordinary `cron` job on the NAS to scrub the ZFS pool once a week. With about a terabyte of data in the pool at the moment, the scrub operation takes about 100 minutes.
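Those two numbers imply an average scrub rate, which gives a rough way to predict how scrub times will grow as the pool fills. The sketch uses decimal units and assumes the rate stays constant, which is optimistic for fragmented or nearly full pools. The crontab line in the comment is a hypothetical example for a pool named `tank`. Because cron’s default `PATH` may not include the directory that holds `zpool`, it uses a full path, which you should confirm on your own system.

```python
# Hypothetical crontab line: scrub the pool "tank" every Sunday at 03:00.
#   0 3 * * 0  /usr/sbin/zpool scrub tank
# `command -v zpool` shows the actual path on a given system.

MB_PER_TB = 1_000_000  # decimal units, as drive vendors use


def scrub_rate_mb_s(data_tb: float, minutes: float) -> float:
    """Average scrub throughput implied by one observed scrub."""
    return data_tb * MB_PER_TB / (minutes * 60)


def estimate_scrub_minutes(data_tb: float, rate_mb_s: float) -> float:
    """Rough scrub duration, assuming throughput stays constant as the pool grows."""
    return data_tb * MB_PER_TB / rate_mb_s / 60
```

About 1 TB in about 100 minutes works out to roughly 167 MB/s, so doubling the data to 2 TB would suggest a scrub of around 200 minutes.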

I mentioned offsite replication above, but I actually haven’t set that up yet. My good friend Logan Boyd has agreed to build a similar system to mine, and once it’s set up we’ll use `zfs send` between the systems to replicate our ZFS datasets. OpenZFS supports dataset encryption, so we’ll be able to send each other encrypted blocks and maintain our privacy while still providing an offsite replica of the data. In a recovery scenario, all we’d have to do is unlock the encrypted replica. The systems will each run on our local home networks, so we’ll have to set up port forwarding in order to access the systems over SSH. We won’t have root on each other’s systems, so we can protect our own local datasets with ordinary Linux filesystem permissions.
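Since this part isn’t built yet, here is only a sketch of what one replication step could look like. The dataset `tank/home`, the snapshot names, the target `backup/home`, and the host are all hypothetical. It uses a raw send (`zfs send --raw`), which transmits an encrypted dataset as ciphertext, so the receiving side never needs the key. `-i` makes the stream incremental relative to an earlier snapshot that both sides already have, and `zfs receive -u` leaves the received dataset unmounted. Without root on the remote machine, the receiving user would presumably need permissions delegated with `zfs allow` (for example `create`, `mount`, and `receive` on a parent dataset).

```python
import shlex
import subprocess
from typing import Optional


def build_send_command(dataset: str, snapshot: str, previous: Optional[str] = None) -> list[str]:
    """Raw send: encrypted blocks stay encrypted in transit and on the replica."""
    cmd = ["zfs", "send", "--raw"]
    if previous is not None:
        # Incremental stream: only the changes since a snapshot both sides share
        cmd += ["-i", f"{dataset}@{previous}"]
    cmd.append(f"{dataset}@{snapshot}")
    return cmd


def build_receive_command(host: str, target: str) -> list[str]:
    """Run zfs receive on the remote host over SSH; -u skips mounting the replica."""
    return ["ssh", host, shlex.join(["zfs", "receive", "-u", target])]


def replicate(dataset: str, snapshot: str, host: str, target: str,
              previous: Optional[str] = None) -> None:
    """Equivalent to `zfs send ... | ssh host zfs receive ...`."""
    send = subprocess.Popen(build_send_command(dataset, snapshot, previous),
                            stdout=subprocess.PIPE)
    recv = subprocess.Popen(build_receive_command(host, target), stdin=send.stdout)
    send.stdout.close()  # lets send see a broken pipe if receive exits early
    recv_rc = recv.wait()
    send_rc = send.wait()
    if send_rc != 0 or recv_rc != 0:
        raise RuntimeError(f"replication failed: send={send_rc}, receive={recv_rc}")
```

The first transfer would omit `previous` to send a full stream. After that, each run only needs the most recent snapshot that both machines share.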

I also wrote a program to monitor this NAS build and send me Telegram notifications when any of a few different conditions are met, such as an SSH login from a new IP or a change in the health status of a ZFS pool. You can find that program here.
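The pool-health part of such a monitor could look roughly like the sketch below. This is not that program. It is an independent illustration that reads `zpool list -H -o name,health` (tab-separated, no header) and reports any pool whose state changed since the last reading. The Telegram bot token and chat ID are placeholders. `send_telegram` posts to the Bot API’s `sendMessage` method.

```python
import subprocess
import urllib.parse
import urllib.request


def parse_pool_health(output: str) -> dict[str, str]:
    """Parse `zpool list -H -o name,health` output into {pool: state}."""
    health = {}
    for line in output.splitlines():
        if line.strip():
            name, state = line.split("\t")
            health[name] = state
    return health


def read_pool_health() -> dict[str, str]:
    out = subprocess.run(["zpool", "list", "-H", "-o", "name,health"],
                         capture_output=True, text=True, check=True).stdout
    return parse_pool_health(out)


def health_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Describe every pool whose health differs between two readings."""
    messages = []
    for name in sorted(old.keys() | new.keys()):
        before = old.get(name, "ABSENT")
        after = new.get(name, "ABSENT")
        if before != after:
            messages.append(f"pool {name}: {before} -> {after}")
    return messages


def send_telegram(token: str, chat_id: str, text: str) -> None:
    """POST a message via the Telegram Bot API (token and chat_id are placeholders)."""
    url = f"https://api.telegram.org/bot{token}/sendMessage"
    data = urllib.parse.urlencode({"chat_id": chat_id, "text": text}).encode()
    with urllib.request.urlopen(url, data=data, timeout=10):
        pass
```

A periodic job would save the previous reading to disk, compare it with `read_pool_health()`, and call `send_telegram` for each message that `health_changes` returns.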

[1] I guess you can copy all of those physical things, too, but my point is that it’s typically easy to copy digital stuff, while for physical stuff it’s usually a challenge limited by resources and available technology.

[2] There’s the physical world again, ruining everything. Stupid entropy.

[3] ECC means Error-Correcting Code. ECC RAM is like regular RAM, except it stores extra check bits alongside the data, typically 8 check bits for every 64 data bits. The memory controller can use these bits to correct single-bit errors (and detect double-bit errors), provided that the CPU and motherboard support it. In theory, this is important for all kinds of computing, but in practice it isn’t all that common outside of server hardware because bit flips in memory aren’t usually a problem for everyday applications.

[4] I was planning to 3D print a case, which is why the build doesn’t have one.

[5] Which would be impressive, considering we probably don’t even work for the same company.

[6] Which makes sense because these kinds of machines aren’t setting any FLOPS records, but that’s fine for me because I just need to be faster than the attached HDDs, which isn’t too hard.

[7] Yes, these are SMR drives. This is another price optimization that hasn’t been an issue for me (yet).